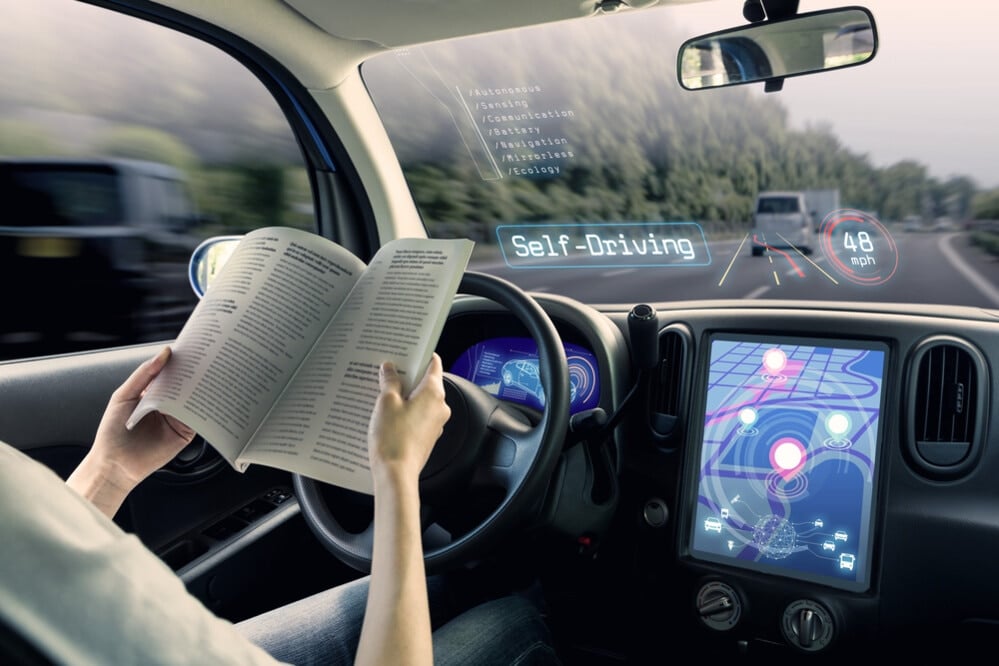

The future of driving doesn’t have humans at the steering wheel. Self-driving cars have come a long way since experiments on them began in the 1920s. In 2014, Tesla introduced Autopilot, its driver-assistance software, which came to feature Autosteer, Autopark, and Traffic-Aware Cruise Control (TACC). While impressive, Autopilot is nowhere near fully autonomous, and it reflects a growing sentiment about fully self-driving cars: they’re harder to make than we thought. In June 2016, Elon Musk, CEO of Tesla, predicted that cars able to operate without human supervision would be available in just a few years. Well, it’s been a few years, and it looks like it’s going to be a few more.

Despite the challenges, the benefits of self-driving cars far outweigh the cost of developing them. The number one advantage is safety. Taking human error out of driving should reduce crashes significantly. Computers can make decisions in a split second and can communicate those decisions to each other almost instantly. It is fair to say that, should we reach a point where self-driving cars make up the majority of vehicles on the road, traffic jams may become a thing of the past. Hence the race to develop a car that doesn’t require a human driver.

Current Tesla models can come with the option for Full Self-Driving (FSD). Theoretically, this system can operate independently, but its development and safety are still lacking. For now, it provides smart driving assistance, with the hope that true self-driving capabilities will be added in the future. It is no surprise, then, that Musk is set to release his Full Self-Driving Beta in about a month. The software enables Tesla vehicles to virtually drive themselves on both highways and city streets, but it is a far cry from a fully autonomous car: it still requires constant human supervision and is programmed to function only on American roads.

With setbacks regularly keeping car companies from finding a silver bullet, it seems almost counterintuitive to say that self-driving software is improving all the time. But it is, literally. With every turn a car running this software makes, and with every intersection and traffic jam it encounters, it gets smarter, learning the intricacies and habits of human drivers. The benefit isn’t limited to that one car. It extends to the entire self-driving fleet, because any information gleaned by one “smart” car is shared with every other car. The software thus becomes more intuitive as the cars cover more ground and gain more experience. The more data we give, the safer our cars become. Yet humans are still needed for blind corners and tricky intersections.

The National Highway Traffic Safety Administration (NHTSA) would like to remind you of this, as 11 recent crashes involving Tesla Autopilot have prompted an investigation. The common factor in these crashes is emergency vehicles. All 11 accidents took place at emergency stoppages, with the Tesla hitting either an emergency vehicle or a passenger vehicle also in the area. The investigation is likely to lead to an engineering analysis, a process in which the administration examines Tesla’s current self-driving methods, including things like car recognition and AI braking habits. That could have broader implications for the self-driving movement. The main questions are whether a recall is necessary and whether the systems Tesla currently employs are intuitive enough to be on the road.

While Tesla is the face of self-driving, companies like Waymo, the self-driving unit of Google’s parent company Alphabet, are facing the same hurdles. Clearly, the goal of full autonomy is still a few more miles down the metaphorical road. For now, as has always been the case, humans will stay behind the wheel.