Unless you live under a rock, you have likely seen a Deepfake video by now; you just didn’t know it. This budding technology allows the user to replace the likeness of one person with another in digital media. Beyond video, it can be used to create persuasive, entirely fictional photos and even to synthesize audio from scratch. Look no further than the recent Disney+ series The Mandalorian. When the show brought back Mark Hamill as the beloved Star Wars character Luke Skywalker, it was revealed that not only was his image altered to look younger using Deepfake, but his voice was also completely synthesized. And while this leap in media technology has been lauded by many, some worry that in the wrong hands it could wreak havoc on the lives of those on screen.

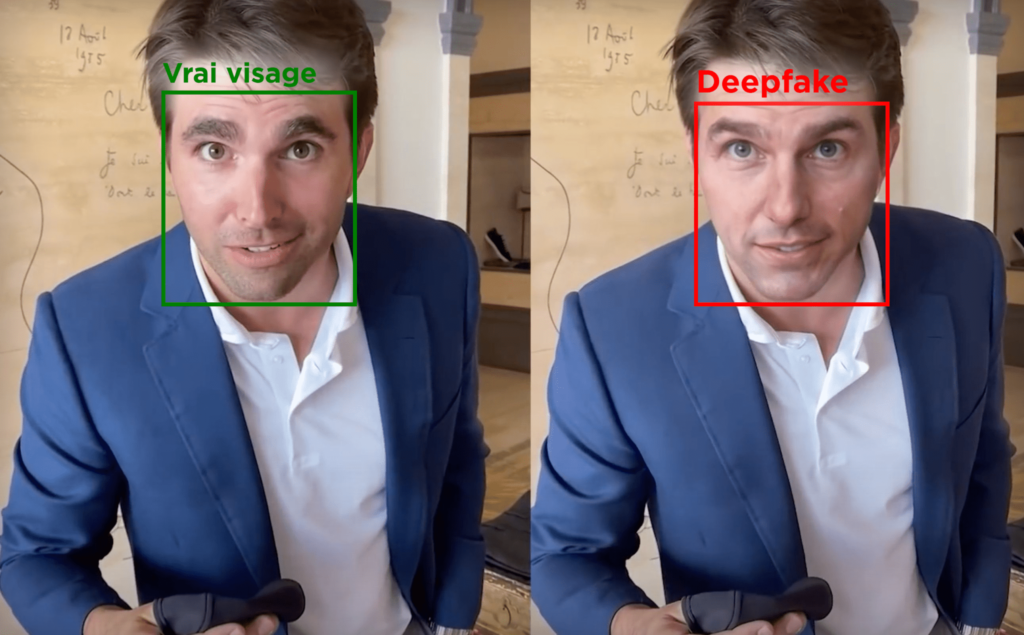

Deepfake works by relying on two machine learning (ML) models that work against each other. An ML model is a program that learns to recognize patterns in data. By feeding it large amounts of example data, the model becomes proficient at finding those patterns; this process is called training the model on a dataset. With Deepfake, one model generates fake images after training on footage of the person or thing to be faked, while the other model inspects each new product for evidence of forgery. Once the second model can no longer distinguish the real video from the superimposed one, the work is done. Early iterations largely involved celebrities, since plenty of sample footage of them was available to train on.
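The two-model setup described above matches what is usually called a generative adversarial network (GAN). Real Deepfake tools work on images with large neural networks; the sketch below is only a toy, one-dimensional illustration in plain Python, assuming made-up "real data" drawn from a normal distribution with mean 4. The generator `G(z) = a*z + b` turns random noise into fake samples, and the discriminator `D(x) = sigmoid(w*x + c)` estimates the probability that a sample is real. All names, the learning rate, and the small weight penalty on `w` are illustrative choices, not part of any Deepfake product.

```python
import math
import random

def sigmoid(s: float) -> float:
    """Numerically stable logistic function (avoids overflow in math.exp)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Toy 1-D GAN. Real data ~ N(4, 1); every setting here is illustrative."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters: fake sample = a*z + b
    w, c = 0.0, 0.0   # discriminator parameters: D(x) = sigmoid(w*x + c)
    decay = 0.1       # assumed L2 penalty on w to damp back-and-forth swings
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)   # one "real" sample
        z = rng.gauss(0.0, 1.0)        # random noise fed to the generator
        x_fake = a * z + b             # one "fake" sample

        # Discriminator step: push D(x_real) toward 1 and D(x_fake) toward 0
        # (gradient descent on -log D(x_real) - log(1 - D(x_fake))).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake + decay * w
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated discriminator
        # scores the fake as real (gradient descent on -log D(x_fake)).
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w    # derivative of -log D(x) with respect to x
        a -= lr * grad_x * z
        b -= lr * grad_x
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 4.0)")
```

Early in training the discriminator learns that larger values look real, which pushes the generator's offset `b` up from 0 toward the real mean. Because this discriminator is a single linear score, it can only notice a shift in the mean, so the spread `a` is not meaningfully learned, and toy GANs like this one can oscillate rather than settle cleanly.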

There are many positive applications of Deepfake. As stated previously, it can be used in media to bring back characters we thought were lost. Mark Hamill was able to reprise his role as Luke because Deepfake made him appear and sound as he did in the original Star Wars movies. Or you could look to Bruce Willis, who made some serious cash letting a Russian mobile network company Deepfake his image for its ads.

However, you don’t have to be a genius to foresee unwelcome applications of the developing technology. Celebrities and politicians are likely to be the butt of many faked videos in the future. With no reliable method currently available to determine whether such media is genuine, lives could be ruined. Moreover, we are likely to see public distrust grow: because any image could be faked, the subject of a genuine one can simply claim it is a forgery. Accountability will be a thing of the past.

To combat this concerning scenario, prominent companies such as Facebook and Microsoft have partnered with U.S. universities and the Partnership on AI’s Media Integrity Steering Committee to launch the Deepfake Detection Challenge, an initiative that offers prizes to motivate software engineers to develop methods for detecting whether AI has been used to alter a video. On the other hand, Jon Favreau, creator of The Mandalorian, suggested that chain code, a sort of stamp attached to media certifying that it hasn’t been altered, might be the optimal security measure in the fight against Deepfakes. Whatever the technique, all can agree that some safeguard is necessary. Hopefully, we’ll see a future where Deepfake is only used to induce nostalgia, and not to ruin lives.
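The "stamp" idea can be illustrated with a keyed hash. This is not chain code or any specific system Favreau described; it is a minimal sketch using Python's standard `hashlib` and `hmac` modules, assuming a hypothetical publisher key (`PUBLISHER_KEY`) and hypothetical helper names `stamp` and `is_unaltered`. Changing even one byte of the media produces a different tag, so verification fails.

```python
import hashlib
import hmac

# Hypothetical secret held by the publisher. A real scheme would more likely
# use public-key signatures so anyone can verify without knowing the secret.
PUBLISHER_KEY = b"example-publisher-key"

def stamp(media: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Return a tag (HMAC-SHA256, hex) that binds the key to these exact bytes."""
    return hmac.new(key, media, hashlib.sha256).hexdigest()

def is_unaltered(media: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(stamp(media, key), tag)

original = b"stand-in for raw video bytes"
tag = stamp(original)
print(is_unaltered(original, tag))            # True
print(is_unaltered(original + b"\x00", tag))  # False: one extra byte breaks the stamp
```

Note the limitation of any stamp like this: it only shows that media hasn't changed since it was stamped, not that the stamped original was genuine in the first place.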